At Techriv, we don't just write code; we create solutions that drive real results, and we stand with you from day one through long-term success. By understanding your unique challenges, we deliver digital assets that look sharper, convert better, and scale seamlessly with your ambitions.

Premium web solutions for your business

Everything your business needs: brand, design, development, and SEO that put you ahead of competitors.

5.0

Loved by businesses

Portfolio

Techriv clearly understands how modern digital products should be built. Their approach was structured, thoughtful, and focused on real outcomes rather than surface-level design. The collaboration felt smooth, and the final result aligned perfectly with our product vision.

Berhan Polat

Founder, Multiplechat

Confidential

“I checked his portfolio and there was just a click! I just saw in his work the vision and design I wanted to have for my (very bad) hand made draft! The communication was very clear, simple. His English is perfect and there was neve...”

Steven

Founder, Voiiice

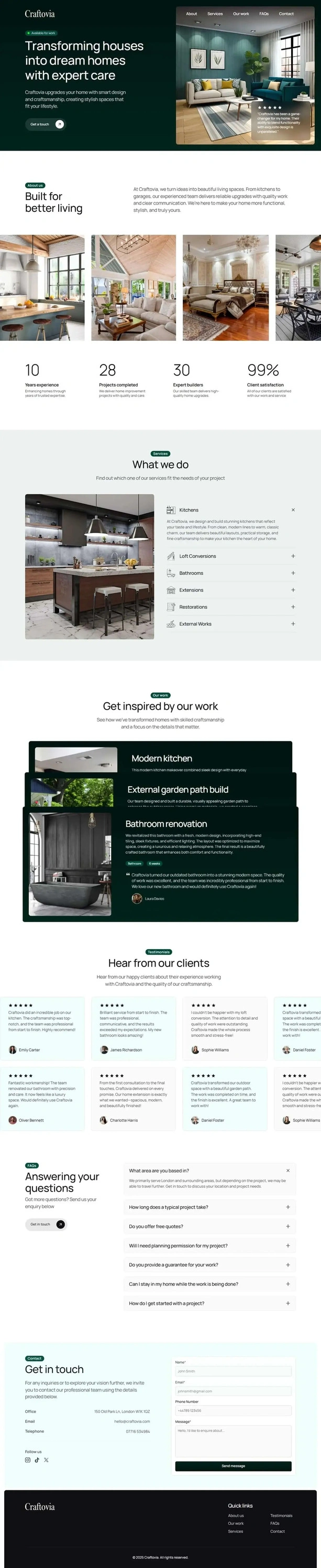

Built a modern, user-centric platform with an emphasis on clarity, speed, and seamless interaction. The work balanced design precision with robust engineering to support real-world use cases.

Riaz Shageer

Founder, RS, USA

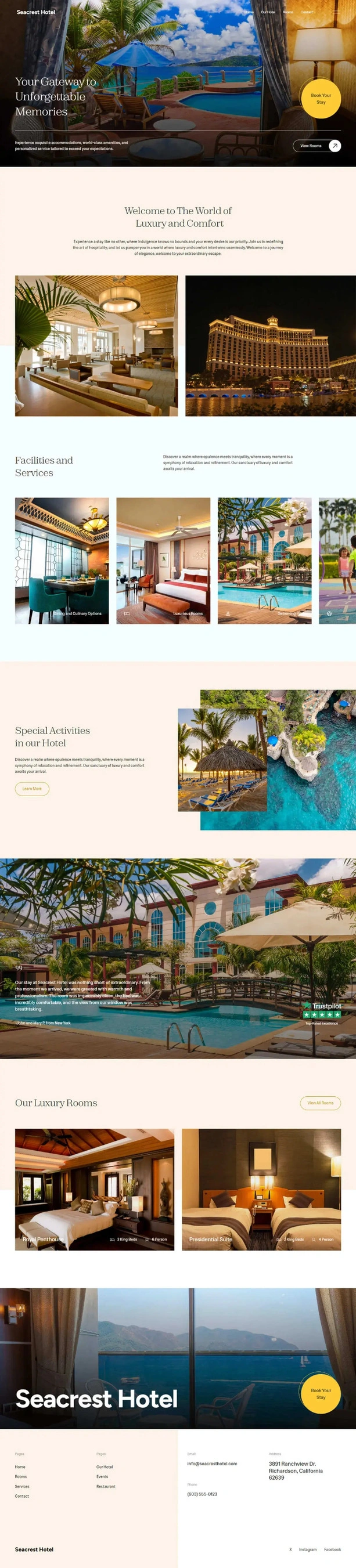

UI/UX Design

Create intuitive, user-centered designs that drive engagement and boost conversions. Our research-driven process crafts seamless experiences and stunning interfaces that connect with your audience and transform visitors into loyal customers.

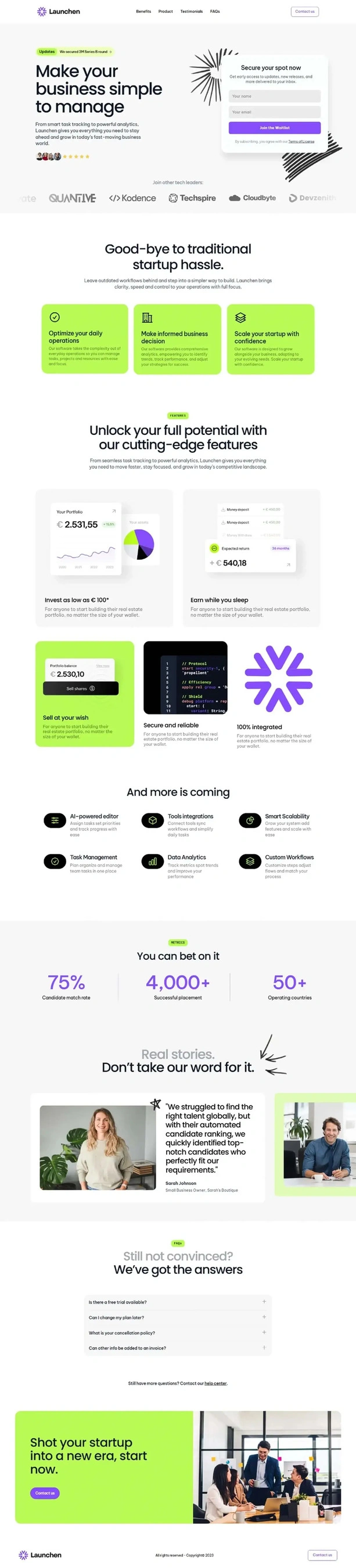

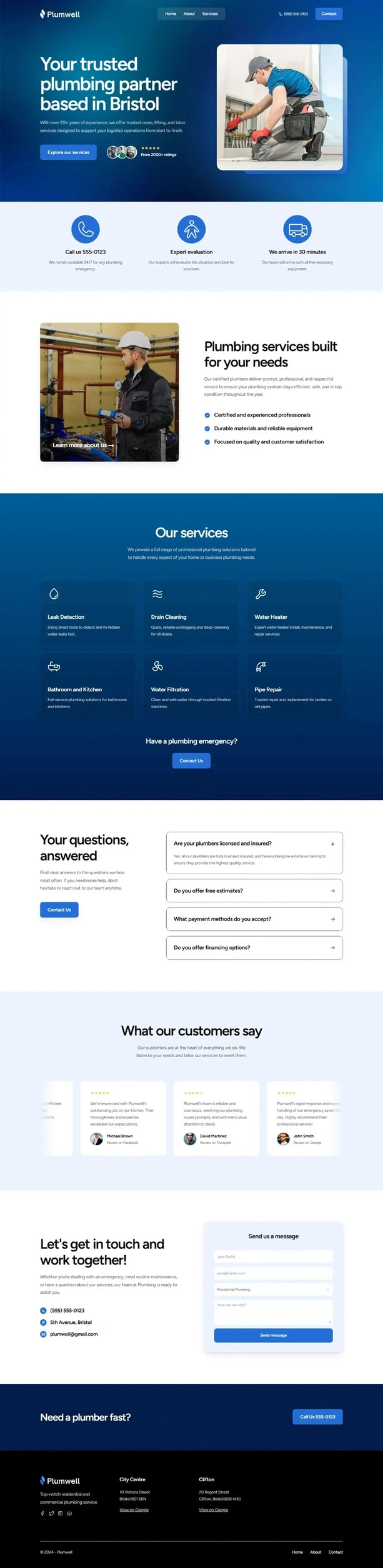

Web Development

Build powerful, scalable websites that convert visitors into customers. Our cutting-edge development approach ensures fast-loading, mobile-responsive sites that drive growth and deliver exceptional user experiences across all devices.

No Code/Low Code

Build powerful applications on modern no-code platforms without extensive custom coding. We create custom solutions rapidly and cost-effectively, enabling faster time-to-market while maintaining professional quality and seamless functionality for your business needs.

MVP

Launch your startup idea faster with lean, focused product development that validates market demand. We build essential features first, gather user feedback quickly, and iterate smartly to maximize your chances of reaching product-market fit.

SEO

Dominate search rankings and attract qualified organic traffic that converts into loyal customers. Our proven SEO strategies increase your website's visibility, authority, and search performance while delivering sustainable, long-term growth.

Real results. Proven experience.

Stacks that scale.

Beyond expectations

Deadlines we actually keep

Design with purpose

Obsessed with pixel perfection

Regular updates

Not your ordinary agency

We stick around

Results over promises

Uniquely yours

Innovation meets execution

Your problem is ours.

Let's talk about it.

Schedule a call with Adil Aijaz

or email us at